Keyword research plays a pivotal role in Google Ads (formerly known as Google AdWords). When you’re creating and optimising Google Ads campaigns, keyword research is a fundamental step. After all, knowing the right keywords to include can make the difference between a successful ad campaign and one that falls flat.

Here are the many ways that keyword research is related to Google Ads.

Keyword research is the foundation of any Google Ads campaign. You start by selecting relevant keywords that align with your products, services, or goals. These keywords trigger your ads to appear when users search for those terms on Google.

The next step is using keyword research tools to identify the most appropriate and effective keywords for your ad campaigns. The goal is to choose keywords that are not only relevant but also have sufficient search volume to reach your target audience. The more of the right people who search for, and find, your business through those keywords, the better your campaign is likely to perform.

It might sound counterintuitive, but negative keywords can help improve your campaign’s performance. As well as selecting target keywords, researching and adding negative keywords (the terms you don’t want your ads to appear for) helps to filter out irrelevant traffic and prevent wasted ad spend.
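Here is a minimal Python sketch of how a broad-match negative keyword screens out searches. A search is excluded if it contains every word of a negative keyword, in any order. This is a simplification: Google’s real matching also has phrase and exact negatives and its own rules. The keyword list is hypothetical.

```python
from typing import Iterable, List

# Hypothetical negative keywords for a business selling web design services.
NEGATIVE_KEYWORDS = ("free", "jobs", "wordpress tutorial")


def is_excluded(search_term: str, negatives: Iterable[str] = NEGATIVE_KEYWORDS) -> bool:
    """Simplified broad-match negative: exclude the search if it contains
    every word of any negative keyword, in any order."""
    words = set(search_term.lower().split())
    return any(set(neg.lower().split()) <= words for neg in negatives)


def filter_searches(searches: List[str]) -> List[str]:
    """Keep only the searches that no negative keyword excludes."""
    return [s for s in searches if not is_excluded(s)]


searches = [
    "web design agency",
    "free web design",
    "web design jobs",
    "tutorial for wordpress beginners",
]
print(filter_searches(searches))  # ['web design agency']
```

The last search is excluded even though its words are in a different order from the negative keyword “wordpress tutorial”.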

The competitiveness and cost of keywords play a role in bid strategy. Keyword research helps you understand the bidding landscape and set appropriate bids to achieve your advertising goals.

The relevance of the chosen keywords to ads and landing pages is a key factor in Google’s Quality Score. Higher Quality Scores can result in better ad positioning and lower costs. Remember, effective keyword research ensures alignment and relevance.

Keyword research influences the ad copy you create and the content on your landing pages. Your ads should match the search intent of the keywords you’re targeting to improve click-through rates and conversions.

Google Ads offers different keyword match types: broad match, phrase match and exact match. Broad match modifier used to be a fourth option, but Google retired it in 2021 and folded its behaviour into phrase match. Keyword research helps you decide which match types to use based on your campaign objectives.

Keyword research may reveal valuable long-tail keywords that have lower competition and can be a cost-effective way to reach a highly targeted audience. Long-tail keywords are longer, more specific phrases rather than individual words, and they often mirror the questions your customers frequently ask.

Things change all the time online, and ongoing keyword research can help discover new keywords to expand campaigns and reach more potential customers.

By monitoring your Google Ads campaigns, you can make data-driven decisions based on how your chosen keywords perform. You can adjust bids, create new ad groups, or pause underperforming keywords based on the data you gather.

Our Google Ads blog series will keep you updated on all the latest changes in Google Ads. Follow the blog for more information and posts.

Right now, an estimated 328.77 million terabytes of data are created every day. And those terabytes soon add up: in 2023, around 120 zettabytes of data were generated worldwide. Vast quantities of that data are user-generated, with video accounting for more than half of all internet data traffic.
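The two figures above are consistent with each other. This quick check assumes decimal (SI) units and a 365-day year:

```python
# Figures quoted above, in decimal units: 1 TB = 10**12 bytes, 1 ZB = 10**21 bytes.
TB_PER_DAY = 328.77e6
TB_PER_ZB = 1e9  # 10**21 / 10**12

# Scale the daily volume up to a year, then convert terabytes to zettabytes.
zb_per_year = TB_PER_DAY * 365 / TB_PER_ZB
print(f"{zb_per_year:.1f} ZB per year")  # 120.0 ZB per year
```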

With the data market estimated to be worth $400 billion by 2030, what changes can we expect to see in the next few years? The next three factors will have a large impact on the future of data.

How will big data change? Well, it’s going to get bigger. A lot bigger. Machine learning and GenAI tools are showing no signs of slowing down and they need to be trained on ever larger and more up-to-date data sets to increase their knowledge. These data sets help to spot patterns and inform decision-making. With personalisation set to be a major trend this year and into the future, big data and GenAI may increasingly be used to tailor targeted advertising, create user-focused online journeys, and refine customer service sequences.

The constantly evolving and hugely interconnected Internet of Things (IoT) will be another creator of vast amounts of data. IoT connections are predicted to hit 38 billion by 2030, with most of the focus on smart home and building technologies. There will be more IoT devices in your home than you might think, including smart alarms, temperature sensors, speakers, cameras and maybe even door locks. The latest generation of cars are effectively IoT devices, reporting every action back to their manufacturer. Each device will capture data, and with future GenAI integrations, the opportunities for this interconnected data to improve people’s quality of life and increase efficiency are huge. When it comes to analysing that data, the opportunities for further personalisation are almost limitless.

Google Analytics 4, or GA4, launched in 2020 and replaced Universal Analytics in 2023.

While GA4 can’t currently migrate existing data from Universal Analytics, the quality and quantity of data it delivers can be hugely beneficial for businesses that want to learn more about the effectiveness of their content, their website traffic, their conversion rates and more. With a wealth of audience data at your fingertips, you can tailor your website and marketing content for maximum results. Better data allows you to make better-informed decisions and keep pace with customer expectations.

While having all the data in the world sounds appealing, you need to focus your efforts on the parts that matter most while maintaining its safety and relevance. Whether that’s audience data, consumer insights data, website traffic, or something else, only you know what is most important for your business.

If you need to catch up on any blogs in our data series, you can go back to the beginning and read more. Make sure you’re following the Infotex blog for more insights throughout the year.

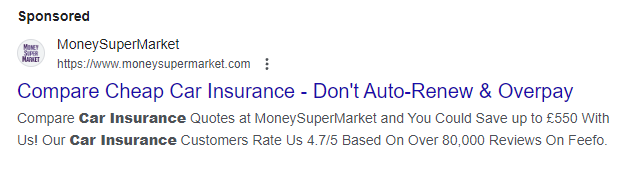

Google Ads is an online advertising platform provided by Google that allows businesses and advertisers to display their ads on Google’s search engine results pages (SERPs), websites within the Google Display Network, YouTube, and various other Google partner sites. It is a pay-per-click (PPC) advertising system where advertisers bid on keywords and pay for their ads when users click on them.

Responsive search ads let you create an ad that adapts to show more relevant messages to your customers. Enter multiple headlines and descriptions when creating a responsive search ad, and over time, Google Ads automatically tests different combinations and learns which combinations perform best.

Performance Max is a new goal-based campaign type that allows performance advertisers to access all of their Google Ads inventory from a single campaign. It’s designed to complement your keyword-based Search campaigns to help you find more converting customers across all of Google’s channels like YouTube, Display, Search, Discover, Gmail and Maps.

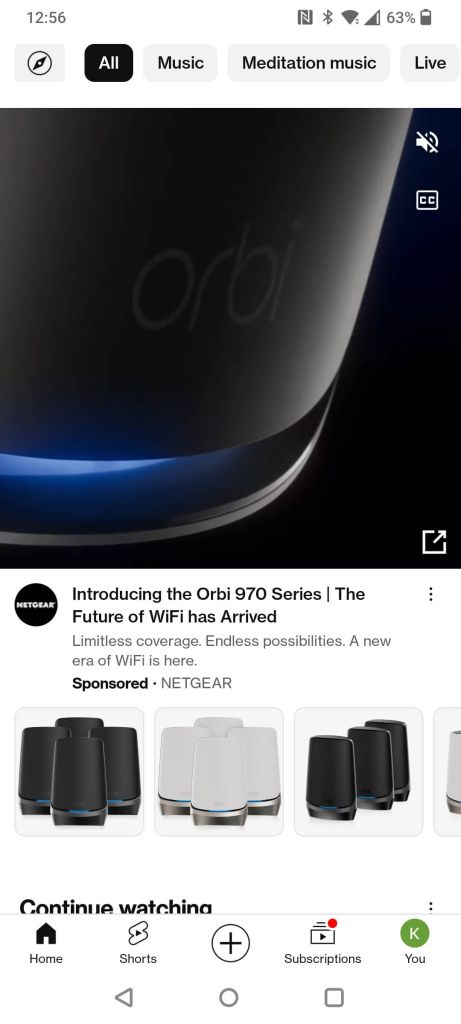

Discovery ads appear in places where people are likely to be researching products or watching product reviews, such as the YouTube homepage. Google users can opt out of the tracking used to build targeted Discovery ads, but most people don’t, and Google claims it can reach up to 3 billion users. Google does this by tracking users:

A display ad is a type of online advertisement, made up of images or video, that appears across the millions of websites worldwide that are part of Google’s Display Network, including YouTube and third-party websites.

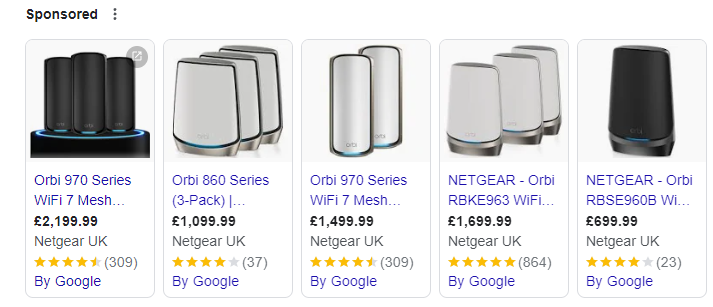

Shopping ads use your product catalogue to showcase e-commerce-optimised ads across the Google Search and Display networks. Google’s automatic targeting shows the products it judges most relevant to a user’s search, displaying product listings (both ads and organic results) in an e-commerce-inspired layout that’s easy to browse, click through and buy from. Example:

This article is part of our blog series, Websites 101, lightly introducing and explaining important topics on everything to do with websites, including design, digital marketing, software, infrastructure and beyond.

Do you have a question you’d like answered as part of the Websites 101 blog series? Get in touch to let us know.

We’ve been exploring some of the most commonly asked questions and the areas of data that can feel confusing. When it comes to data, one law that everyone must obey is GDPR. But what is the GDPR, how does it affect businesses in the UK and how do you know if your data is safe?

GDPR, or the General Data Protection Regulation, is a European Union law that governs how personal data is stored and shared across the EU. Although the UK has left the EU, it retained GDPR in domestic law as the UK GDPR, which sits alongside the Data Protection Act 2018 and still applies to all businesses trading in the UK. The main aspect of UK GDPR to consider when you’re working online and sharing information is anything that involves processing and storing personal data. Customer personal data includes names, email addresses, physical addresses and any other personally identifiable information, or PII. Handling personal data is a big responsibility for any business, and since GDPR came into force in 2018, the way businesses handle and store PII has changed for the better.

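GDPR is based on seven principles of data protection and eight data privacy rights for customers. The principles of data protection are:

- Lawfulness, fairness and transparency
- Purpose limitation
- Data minimisation
- Accuracy
- Storage limitation
- Integrity and confidentiality (security)
- Accountability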

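And the eight privacy rights:

- The right to be informed
- The right of access
- The right to rectification
- The right to erasure
- The right to restrict processing
- The right to data portability
- The right to object
- Rights in relation to automated decision-making and profiling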

These principles and customer rights combine to make a powerful framework for storing and handling data, designed to keep everyone safe and everyone’s personal data secure from hacks and cyber attacks. With email and phishing scams on the rise, data must be kept safe to ensure customer trust. Some of the most common questions we are asked as an online business are about personal data, and how it is stored. Here are just a few of them.

Most personal data is stored like any other data: either digitally on a local or cloud drive, and sometimes physically on paper. Online storage options like OneDrive or Google Drive are common cloud applications being used all over the world and are popular due to their ease of access and user-friendly nature. The cloud data is then held in data centres and protected by robust cybersecurity principles.

There is no single answer to this, because security is always about the weakest link. Ask yourself whether the website is requesting excessive amounts of personal data, and review its privacy policy to understand how any data you do share will be stored and processed and, more importantly, who it will be shared with.

We don’t recommend forwarding emails that contain personal information or data. You need consent from the original sender, and if you’re still not sure, consider removing the personal data before forwarding.

While forwarding an email is not illegal, mishandling any personal data within it is in breach of GDPR and can result in hefty fines — even if you didn’t mean to. Some companies choose end-to-end email encryption to make sure everything sent within and outside the company is safe — check out our previous blog all about cybersecurity to find out more about encryption.
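If you decide to strip personal data before forwarding, a small script can catch the most obvious items. The Python sketch below masks email addresses only, using a deliberately simple pattern. It is an illustration, not a compliance tool. It will not spot names, postal addresses or other PII.

```python
import re

# Deliberately simple: local-part@domain with at least one dot in the domain.
# It misses unusual addresses and cannot detect names or other PII.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def redact_emails(text: str) -> str:
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL_PATTERN.sub("[email removed]", text)


message = "Please reply to jane.doe@example.com about her order."
print(redact_emails(message))
# Please reply to [email removed] about her order.
```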

Have you conducted a Data Protection Impact Assessment (DPIA) on the data you are storing? The outcome of that assessment will often help to define any additional compliance requirements. The Information Commissioner’s Office (ICO), the UK’s data protection regulator, offers a quiz and checklist which are a great starting point. You will need to pay special attention to what the ICO identifies as ‘special category data’. This includes, among other things, personal data about an individual’s racial or ethnic origin, their sexual orientation, or their health. Before processing special category data, you must meet some specific conditions, keep records, and consider any risks associated with processing it.

We take your privacy seriously and only collect what we need to get in touch with you, such as your name and email address. In fact, those details never leave the immediate business and can be deleted or anonymised if you ask us to remove your details. We have a comprehensive privacy policy that covers what personal information we collect from you and why. We know that it’s the little things that matter — like knowing your data is in safe hands when you work with Infotex.

For more information on how we look after your data, check out our privacy policy and revisit past blogs on cybersecurity and cloud storage.

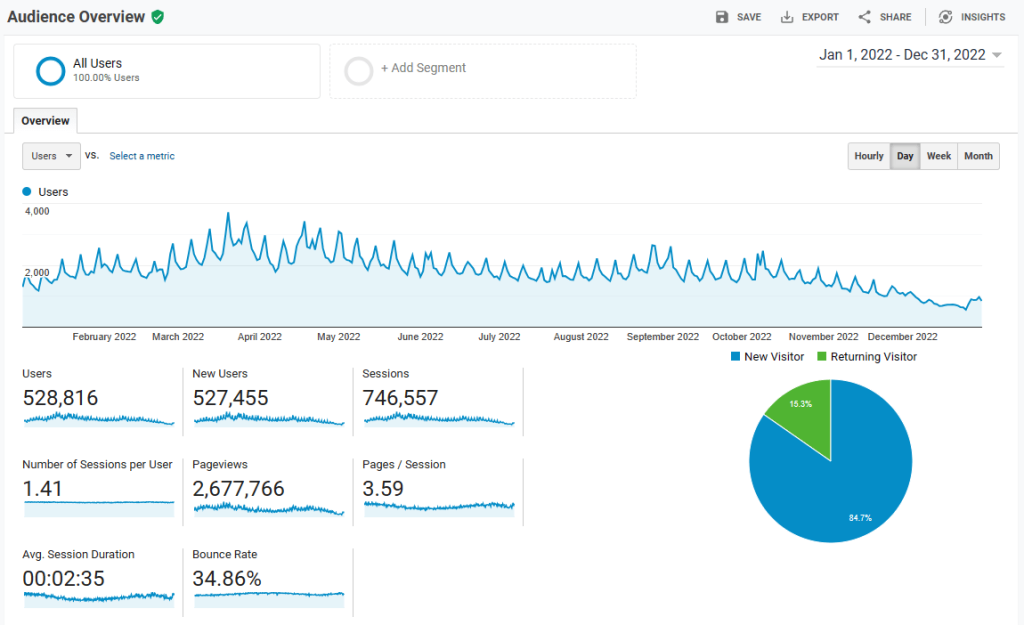

Last year the “old” Google Analytics (Universal Analytics, or UA) stopped collecting data. From 1st July 2024, Google will no longer provide access to the old data and will be deleting it from its systems.

Collecting website statistics lets you compare different date ranges and see how behaviour on the website has changed over time. Are you getting more visitors now? Are they visiting different pages than before?

With the removal of UA data, unless you take action, you’ll only be able to go as far back as the data you have in the latest version of Analytics – Google Analytics 4.

Firstly, do you really need it? Is retaining website activity that is several years old actually useful, or is it just going to sit in a batch of files somewhere, unviewed and unloved? Exact like-for-like comparisons between UA and GA4 aren’t possible. The disruption caused by the pandemic would also make comparisons across multiple years unreliable.

If you do want to keep it, decide what data is important for you. UA held a huge amount of information that the vast majority of users never viewed. As a basic starter, for most people important information was how many site visitors, how they found your site, and which pages were viewed. But is knowing which countries people visited you from or changes in mobile vs desktop access also important?

You should also consider how granular you need the data – this could be monthly for something like site visitors, but for country visits it could be annual.

Lastly, how will you want to use that data in the future? For example, would a PDF of a graph of visitors per month be OK, or is it something you want to compare regularly with more recent data? If the latter, you’ll want the data in a spreadsheet so you can run your own analysis.
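If you export monthly figures to a spreadsheet, rolling them up to a coarser granularity is straightforward. The Python sketch below assumes a CSV export with hypothetical Month and Sessions columns; a real UA export will have its own headers and layout. It sums sessions because they can be added across months. Summing monthly user counts would double-count returning visitors.

```python
import csv
import io
from collections import defaultdict
from typing import Dict

# Hypothetical monthly export; column names and figures are invented.
EXPORT = """Month,Sessions
2021-11,1200
2021-12,950
2022-01,1310
2022-02,1275
"""


def sessions_per_year(csv_text: str) -> Dict[str, int]:
    """Roll monthly session counts up into yearly totals."""
    totals: Dict[str, int] = defaultdict(int)
    for row in csv.DictReader(io.StringIO(csv_text)):
        year = row["Month"][:4]  # "2021-11" -> "2021"
        totals[year] += int(row["Sessions"])
    return dict(totals)


print(sessions_per_year(EXPORT))  # {'2021': 2150, '2022': 2585}
```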

This depends on exactly what you want to keep. For a basic overview of site performance, the simplest approach is to export several reports as PDF files.

To do this, log in to your Google Analytics account and go to Audience: Overview. Select the required date range at the top right. You may want to export several of these reports, one for each year. Select Export: PDF to create your file.

We’d recommend repeating this process for the Acquisition: All Traffic: Channels, and Source / Medium reports and the Behaviour: Site Content: All Pages and Landing Pages reports.

This will give a basic overview of site performance and behaviour per year. You may want to increase the number of rows shown to give greater insight (especially for page data) as 10 can be quite limiting.


NHS organisations rightly place a high level of importance on maintaining the security and integrity of the data within their systems. As a supplier of systems to NHS organisations we take a similar view of the importance of data security.

Within the context of the NHS, and those working on behalf of it, the framework for handling information securely and confidentially is known as Information Governance (IG). IG allows organisations and individuals to manage patient, personal and sensitive information legally, securely, efficiently and effectively in order to deliver the best possible healthcare and services. We tend to think of data security as relating to electronic information but with so much NHS information still paper-based, any IG practices must also consider the safety and security of paper records.

Information Governance covers system and process management, records management, data quality and data protection. It should also include the controls needed when information is shared, whether inside or outside the organisation. Those controls should ensure the sharing is secure, keeps information confidential, and meets the needs of both the organisation providing services and the people it serves.

The legal framework governing the use of personal confidential data in healthcare is complex. It includes:

To verify our awareness of, and compliance with, NHS Information Governance standards, the Infotex Systems team completes the Data Security and Protection (DSP) Toolkit every year. This was formerly known as the Information Governance Toolkit. It is an online self-assessment tool that any organisation with access to NHS patient data and systems must complete. In fact, we exceeded the standards required for our 2022/23 assessment (the 2023/24 assessment is due to start very soon). The DSP Toolkit is similar to the Cyber Essentials Plus accreditation also held by Infotex, but has a specific focus on requirements for NHS organisations.

The DSP Toolkit measures an organisation against 10 data security standards defined by the National Data Guardian. The standards cover a wide range of data security topics. These include handling, storing and transmitting personal confidential data securely, enforcing regular staff training, and giving staff only the level of access to data their role needs, following the principle of least privilege. At the end of the self-assessment process, the organisation’s compliance data is published on the DSP Toolkit website to show how it is complying with the 10 standards.
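The principle of least privilege mentioned above can be sketched as a deny-by-default permission check. The roles and data categories below are hypothetical and only illustrate the idea; they are not taken from the DSP Toolkit.

```python
from typing import Dict, FrozenSet

# Hypothetical roles mapped to the only data categories each role needs.
PERMISSIONS: Dict[str, FrozenSet[str]] = {
    "receptionist": frozenset({"appointments"}),
    "clinician": frozenset({"appointments", "clinical_notes"}),
    "it_support": frozenset({"system_logs"}),
}


def can_access(role: str, data_category: str) -> bool:
    """Deny by default: unknown roles, or categories not explicitly granted, are refused."""
    return data_category in PERMISSIONS.get(role, frozenset())


print(can_access("clinician", "clinical_notes"))     # True
print(can_access("receptionist", "clinical_notes"))  # False
print(can_access("visitor", "appointments"))         # False
```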

As well as maintaining our processes and procedures to comply with NHS requirements the Infotex Systems team ensures that any systems that we build and deliver also follow these same standards. We apply the same standards to systems we build for both NHS and non-NHS customers to ensure the highest standards of information security for all of our customers.

What do you do if you want more control over the front-end but still benefit from the content management capabilities of WordPress? This is where the “headless” approach comes in.

WordPress is a full-featured content management system that enables the creation of standalone websites. That is, a website built on a single codebase with tight integration between all parts of the system (front-end and back-end). Because of this, WordPress can offer rapid development through the use of plugins which can affect both the administration side of the website and the presentation side. The caveat to this is that to gain these benefits, you must write a theme that adheres to the WordPress specification.

Headless refers to the separation of the backend (content storage) from the front-end (dealing with the presentation). A headless CMS allows you to create, edit, and store content but has no way to display that content on its own. The headless CMS will also have an API (Application Programming Interface) that defines how other systems are able to communicate with it in order to access the content.

WordPress does not have a headless mode per se, but this can be achieved in three steps. Host WordPress on a domain (or a subdomain such as cms.yourwebsite.com), create a blank theme, and redirect traffic from the WordPress site elsewhere (for example, to the front-end domain).

In doing this, you can still use the WordPress CMS to create content, but WordPress itself will not display it anywhere. The content is only available to other systems through the API.

There are numerous frontend frameworks available today, written in any number of languages. You could even write the whole website front-end from scratch without a framework (using pure HTML, CSS & JavaScript). However, for its speed, ease of use, and strong development community, our preference is to use NextJS. NextJS is a ReactJS-based front-end framework with lots of modern features like routing, server-side rendering, and static generation. All of these aid in building a website quickly with a focus on performance.

Out of the box, WordPress provides what is known as a REST API, which can be used to fetch posts, pages, tags and so on from the WordPress CMS. To gain a bit more control, we can use GraphQL, a query language for APIs that lets us dictate exactly what data each request returns. WordPress doesn’t include GraphQL in core, so it is usually added with a plugin such as WPGraphQL. Returning only the fields we ask for results in faster responses and better privacy, because unnecessary data is excluded.
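Here is a Python sketch that builds (but does not send) both kinds of request, to show the difference. It assumes WordPress is hosted at the hypothetical cms.yourwebsite.com from the example above. The /wp-json/wp/v2/posts endpoint and its per_page and _fields parameters belong to the core REST API. The /graphql endpoint and the query shape assume the WPGraphQL plugin’s defaults.

```python
import json
from urllib.parse import urlencode

# Hypothetical CMS host, following the cms.yourwebsite.com example above.
CMS_BASE = "https://cms.yourwebsite.com"


def rest_posts_url(per_page: int = 5) -> str:
    """URL for the core REST posts endpoint, trimmed with _fields to three fields."""
    params = {"per_page": per_page, "_fields": "id,title,slug"}
    return f"{CMS_BASE}/wp-json/wp/v2/posts?{urlencode(params)}"


def graphql_posts_body(first: int = 5) -> str:
    """JSON body to POST to {CMS_BASE}/graphql (assumes the WPGraphQL plugin).

    The query names exactly the fields we want back and nothing else.
    """
    query = """
    query RecentPosts($first: Int) {
      posts(first: $first) {
        nodes { title slug }
      }
    }
    """
    return json.dumps({"query": query, "variables": {"first": first}})


print(rest_posts_url())
print(graphql_posts_body())
```

In a NextJS front end, the same requests would typically be made with `fetch`.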

There are many approaches to building a modern website and we believe strongly in the capabilities offered by a well-configured WordPress CMS with a well-built integrated theme. For the majority of projects, this can take you a long way and offers compelling value. However, for some projects, or clients, the flexibility offered by the headless approach can produce a fast, scalable solution whilst still leveraging the power of a CMS such as WordPress.

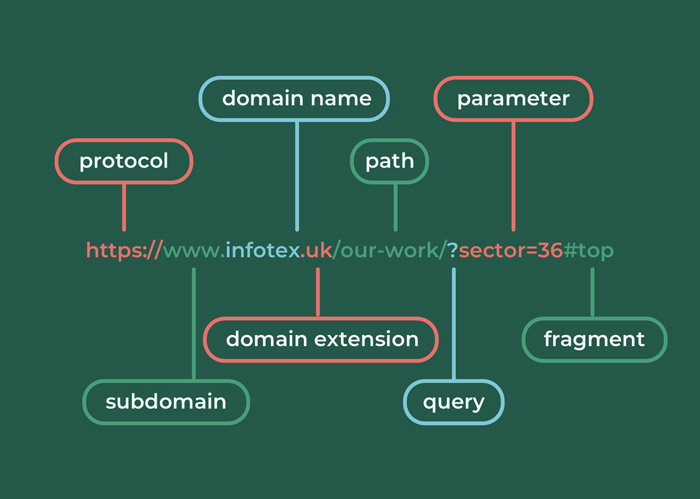

We are all familiar with website addresses (formally called URLs), e.g. https://www.infotex.uk/blog/?cat=1#top

Have you ever wondered what that actually means?

In this article we’re going to take one apart and explain each component.
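Before we take each part in turn, here is a quick Python sketch that splits a complete address into the components covered below, using the standard library’s `urllib.parse.urlsplit`. The URL is assembled from the parts this article dissects. Splitting the host name on dots only works for a simple three-part host like this one; it would not handle a .co.uk domain, for example.

```python
from urllib.parse import urlsplit

# Assembled from the components discussed in the sections below.
url = "https://www.infotex.uk/our-work/?sector=36#top"
parts = urlsplit(url)

print(parts.scheme)    # https           (protocol)
print(parts.hostname)  # www.infotex.uk  (subdomain + domain + extension)
print(parts.path)      # /our-work/      (path)
print(parts.query)     # sector=36       (query string, after the ?)
print(parts.fragment)  # top             (fragment, after the #)

# Only valid for a three-label host such as this one.
subdomain, domain, extension = parts.hostname.split(".")
print(subdomain, domain, extension)  # www infotex uk
```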

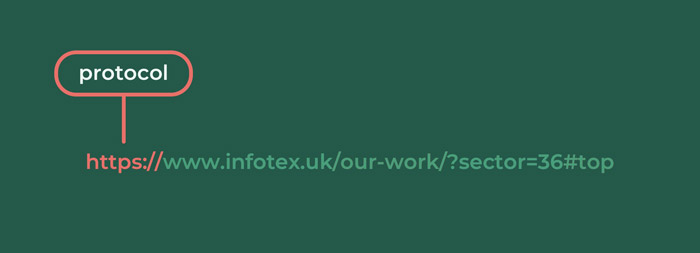

https://

https:// is the protocol segment of a URL. Taken literally, it means HyperText Transfer Protocol Secure.

Contrary to the popular belief that the web is an entirely American invention, HTTP was invented by Britain’s own Sir Tim Berners-Lee and his team back in 1989. It was a very simple, lightweight protocol for transferring simple text documents with minimal formatting across a network of computers. Those documents could link to one another, hence the name hypertext.

There are other protocols you may see around the Internet, for example mailto (email), ftp (file transfer protocol) and tel (telephone number).

At the time of the web’s inception, it was inconceivable that it would be used for purposes requiring security, as it was designed to allow academics to share papers. Also consider that back in the 1980s, encryption carried a massive processing overhead and faced legal challenges, so it was simply ignored. Some of the architects of these early protocols have since said that this was the biggest mistake made at the time.

The s of https stands for secure. HTTPS was introduced by Netscape in 1994 for its Netscape Navigator browser, using the Secure Sockets Layer (SSL) protocol Netscape pioneered, and was formally specified in 2000 (RFC 2818). Its use didn’t become ubiquitous until around 15 years later, after a number of legal hurdles had been overcome. The USA, for example, initially classed encryption as a munition, which made it illegal to export without a licence. China still regulates the use of encryption today.

Many browsers now hide the protocol to simplify the view for users, considering it superfluous nowadays.

HTTP has transformed enormously since those early days. Version 1.0 gave way to 1.1, which allowed multiple files to be downloaded back-to-back over one connection. Version 2 then allowed multiple files to be downloaded concurrently. Version 3 is just starting to reach production and removes many of the low-level performance bottlenecks.

www.

By convention, most sites on the World Wide Web start with www. Technically, though, this part could be anything you like, and some sites use multiple subdomains, e.g. https://shop.

This section is often called a subdomain, as it sits beneath the domain (see next entry) as a child of it. The subdomain is actually a DNS record, or host name, which at the most basic level is used to convert a friendly name into a server’s IP address. Your browser sends the full host name (subdomain and domain) to the server, both while setting up the secure connection and in the HTTP request itself. This allows the server to identify which site and SSL certificate should be served to the viewer.
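Name-based virtual hosting is when one server picks which site to serve from the host name in the request. It can be sketched in a few lines of Python. This models only the HTTP Host header. Certificate selection happens earlier, in the TLS handshake, and is not shown. The shop.infotex.uk host and the document-root paths are hypothetical.

```python
from typing import Dict, Optional

# Hypothetical host names and document roots for sites sharing one server.
SITES: Dict[str, str] = {
    "www.infotex.uk": "/srv/sites/main",
    "shop.infotex.uk": "/srv/sites/shop",
}


def pick_site(raw_request: str) -> Optional[str]:
    """Return the document root named by the Host header of a raw HTTP/1.1 request."""
    lines = raw_request.split("\r\n")
    for line in lines[1:]:  # skip the request line, e.g. "GET / HTTP/1.1"
        if not line:  # a blank line ends the headers
            break
        name, _, value = line.partition(":")
        if name.strip().lower() == "host":
            # Drop any ":port" suffix (assumes a plain name, not an IPv6 literal).
            host = value.strip().split(":")[0].lower()
            return SITES.get(host)
    return None


request = "GET /our-work/ HTTP/1.1\r\nHost: shop.infotex.uk\r\nAccept: text/html\r\n\r\n"
print(pick_site(request))  # /srv/sites/shop
```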

There is some new technology on the horizon called Encrypted Client Hello (ECH). It will allow even this to be encrypted, so that third parties can’t tell which subdomain you’re visiting.

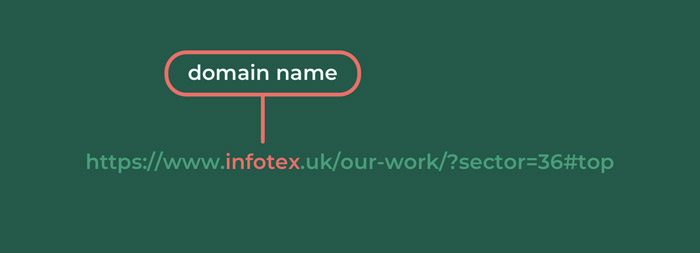

infotex

We have talked about domains before (https://www.infotex.uk/guide-domain-names/). In short, a domain is the part you can personalise by choosing a combination of letters and numbers (and limited symbols such as -) to distinguish your business. Domain rights are rented by the year, typically 1-9 years at a time. The price depends on the domain extension (see below), with some premium extensions such as .xxx costing over £100 per domain per year.

DNS (Domain Name System) operates primarily at the domain name level, allowing you to set nameservers. These hold host records, which “point” a domain name at a server’s IP address(es), an email server or similar.

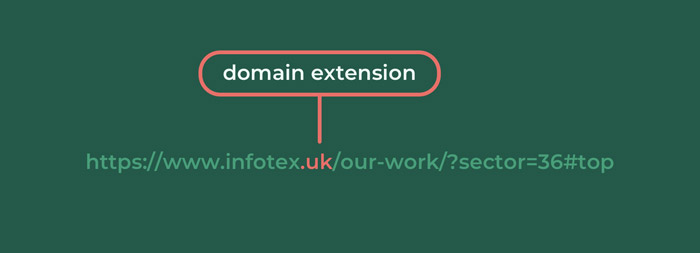

.uk

All domain names have an extension, which is the top level of the DNS hierarchy.

At a technical level all .uk domains (for example) trigger a lookup to the governing body of .uk who will in turn supply the nameservers for the domain lookup (as mentioned above).

A lookup for www.infotex.uk is actually performed as three (or more) separate lookups. Your PC first looks up who controls .uk. It then asks that server who controls infotex.uk. Finally, it asks the infotex.uk nameserver which server www refers to.

In reality, much of this information is cached in the memory of your PC or your ISP’s DNS server, to avoid repeated, time-consuming lookups having to go around the world.
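The step-by-step lookup and caching described above can be modelled in Python with made-up, in-memory “servers”. This is a simplified sketch. Real resolvers start at the DNS root servers, exchange NS and A records over the network, and respect cache expiry times (TTLs); none of that is modelled here. The server names are hypothetical, and 203.0.113.10 is a documentation-only IP address.

```python
from typing import Dict, List, Optional

# Hypothetical stand-ins for DNS servers: each maps a name to either the next
# server responsible for it (a referral) or the final IP address.
ROOT: Dict[str, str] = {"uk": "uk-registry"}
SERVERS: Dict[str, Dict[str, str]] = {
    "uk-registry": {"infotex.uk": "infotex-nameserver"},
    "infotex-nameserver": {"www.infotex.uk": "203.0.113.10"},
}

cache: Dict[str, str] = {}
queries: List[str] = []  # records each simulated network round trip


def resolve(name: str) -> Optional[str]:
    """Resolve a host name by walking down from the extension, one label at a time."""
    if name in cache:  # answered from memory, no round trips needed
        return cache[name]
    labels = name.split(".")  # e.g. ["www", "infotex", "uk"]
    queries.append(labels[-1])
    answer = ROOT.get(labels[-1])  # lookup 1: who controls .uk?
    for i in range(len(labels) - 2, -1, -1):
        if answer is None:
            return None
        zone = ".".join(labels[i:])  # "infotex.uk", then "www.infotex.uk"
        queries.append(zone)
        answer = SERVERS.get(answer, {}).get(zone)
    if answer is not None:
        cache[name] = answer
    return answer


print(resolve("www.infotex.uk"), queries)  # 203.0.113.10 ['uk', 'infotex.uk', 'www.infotex.uk']
print(resolve("www.infotex.uk"), len(queries))  # 203.0.113.10 3 (served from the cache)
```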

Domains were traditionally either generic top-level domains (gTLDs) such as .com / .net / .org, or country code top-level domains (ccTLDs) such as .uk (including .co.uk) / .fr / .eu

In recent years the governing body ICANN has opened up the market, allowing businesses to set up registries under their chosen name. For example, you can use the .bentley top-level domain to find out more about the premium car maker Bentley. The cost and requirements of doing so rule out all but the biggest businesses, such as Bentley, Amazon and Google.

In the UK, the government has charged an independent body called Nominet with overseeing all .uk domain names. Infotex are proud to be members and tag holders at Nominet. This means we can take direct control of your .uk domain rather than having to refer to third parties, and we can help influence some of the policies on how these domains are overseen.

Infotex are naturally also able to register and control most other classes of domain, such as .com, for our clients.

Different domain extensions have different rules. For example, to register a .eu domain you must provide details of your office at a location within the EU (which of course now excludes the UK).

When you see .to or .ly domains, which are commonly used by link shorteners today, it can be fun to ask yourself which country you are actually looking at (hint: .to is Tonga and .ly is Libya).

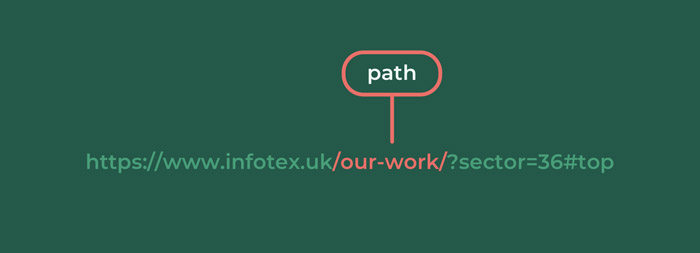

/our-work/

This optional component usually identifies the page, or part of a site, that you are viewing. Traditionally it would contain a filename extension such as .html to indicate that the response is HyperText Markup Language, to be rendered by the viewer’s browser. Nowadays the extension is often dropped from display. Instead, the response carries an invisible header (Content-Type) holding the same information as a “MIME” type, which is more flexible and more user-friendly.

The list of valid paths is set by the site owner and can even include Unicode characters to represent letters from other alphabets, rather than being restricted to the traditional a-z format.
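Python’s standard library can illustrate both points. The first is mapping a filename extension to the MIME type a server would send in its Content-Type header. The second is percent-encoding a path that contains non-ASCII letters. The /our-work/index.html and /our-work/café/ paths are hypothetical examples, not real pages.

```python
import mimetypes
from urllib.parse import quote, unquote

# A traditional path ending in .html maps to the text/html MIME type.
print(mimetypes.guess_type("/our-work/index.html")[0])  # text/html

# Non-ASCII letters travel in the request as percent-encoded UTF-8 bytes...
encoded = quote("/our-work/café/")
print(encoded)  # /our-work/caf%C3%A9/

# ...and are decoded back for display in the address bar.
print(unquote(encoded))  # /our-work/café/
```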

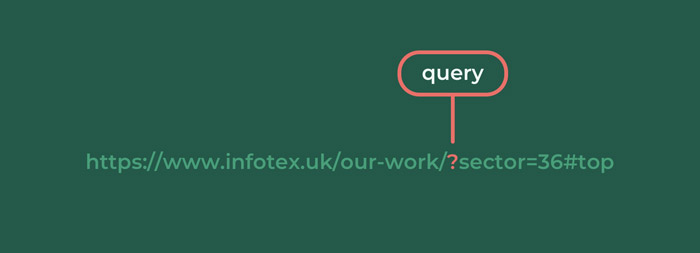

?

With the advent of “friendly URLs” like the path above, query strings are seen less often. However, many of the paths you enter today are actually converted into query strings by the web server without you even realising.
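Here is a minimal Python sketch of that kind of rewrite, using a regular expression to turn a friendly path into the query-string form the server actually processes. The friendly paths are invented for illustration. The sector IDs follow the values used later in this article (36 for ecommerce, 40 for B2B). Real servers usually express such rules in their own configuration rather than application code.

```python
import re
from typing import Optional

# Hypothetical friendly-path slugs mapped to internal sector IDs.
SECTOR_IDS = {"ecommerce": "36", "b2b": "40"}
FRIENDLY = re.compile(r"^/our-work/(?P<sector>[a-z0-9-]+)/$")


def rewrite(path: str) -> Optional[str]:
    """Translate a friendly path into the query-string URL the server runs."""
    match = FRIENDLY.match(path)
    if match is None:
        return None  # not a path this rule handles
    sector_id = SECTOR_IDS.get(match["sector"])
    if sector_id is None:
        return None  # unknown sector slug
    return f"/our-work/?sector={sector_id}"


print(rewrite("/our-work/ecommerce/"))  # /our-work/?sector=36
```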

A query string is made up of one or more parameter pairs (see below), and together with the ? that marks its start, it forms the query. This optional component is intended to be user-controlled. It lets users provide a custom combination of keys and values that help customise the display of the page, and it is typically interpreted by server-side code.

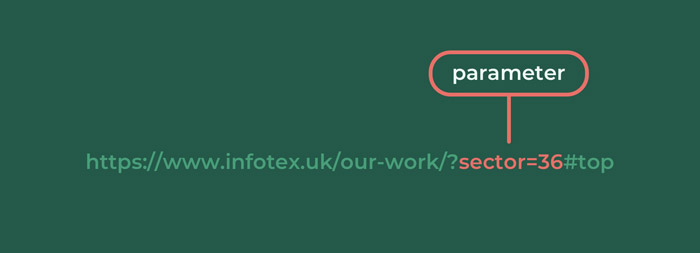

sector=36

In the case of our website’s case studies, sector=36 is used to identify that you wish to filter to see just our ecommerce work, change that value 36 to 40 and you’ll see all our articles about B2B clients.

Parameters work in key and value pairs. A URL can have multiple parameters, each normally with its own key (sector in this case), and multiple parameters are separated by an & (e.g. sector=36&q=design).
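Python’s `urllib.parse` shows how a query string breaks down into key and value pairs, using the sector=36&q=design example above. `parse_qs` returns a list for every key, because some sites do accept repeated keys.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

url = "https://www.infotex.uk/our-work/?sector=36&q=design#top"

# Take the part after the ?, then split it into pairs at & and =.
params = parse_qs(urlsplit(url).query)
print(params)  # {'sector': ['36'], 'q': ['design']}

# Values are lists because a key can repeat (e.g. tag=a&tag=b).
print(parse_qs("tag=a&tag=b"))  # {'tag': ['a', 'b']}

# Building a query string goes the other way: 40 instead of 36 switches the filter.
print(urlencode({"sector": 40, "q": "design"}))  # sector=40&q=design
```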

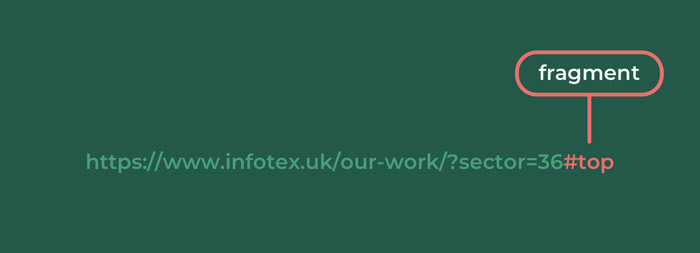

#top

The final part of a URL, the fragment, is again optional and lets the user choose which part of a page they wish to see. In the early days of the web, readers of lengthy academic papers could use these like bookmarks to jump straight to chapter 10 (for example). Entering the fragment triggers the browser to scroll to that part of the page.

In modern usage it can also be a trigger for interactive events such as show and hide a page element (the answer to an FAQ for example).

So now that you know what makes up a URL, the next time you navigate a site you’ll have a much better understanding of the “magic” your computer performs behind the scenes. It brings you information at the click of a mouse or the tap of a screen. It’s pretty impressive, and the result of decades of research and evolution.

Big or small, data takes up valuable storage space. And once physical storage such as hard drives is full, all that data needs to be stored somewhere. This is where the cloud and cloud storage solutions come in. It’s online, on-demand and on the rise globally, so what is the cloud?

The cloud isn’t a place, but a name for the collective online, on-demand availability of services and applications. A global network of remote servers supports and hosts the cloud and allows access to storage wherever you are in the world. Tools like Microsoft OneDrive, Google Drive and Dropbox are great examples of cloud data storage that you’re possibly using already. Beyond storage, there are also cloud systems that make processing this data easier, such as Software as a Service (SaaS) applications like Zoom, Microsoft 365 or Google Workspace.

Such systems can be split into categories:

Traditional servers are bulky, take up physical space and need advanced cooling, leading to a bigger electricity bill as well as more square footage in your business. By switching to cloud computing and storage, you can save space and money while minimising your environmental impact. But where is the cloud, exactly? Your data is held in one or more data centres, and there are many of these, owned by large companies around the world. Google uses 40 data centre regions globally and Microsoft used more than 200 data centres as of 2023, while Cloudflare has a presence in over 300 locations. The quantity and availability of data centres help the cloud work as a fast, reliable tool for data storage and processing around the world.

Holding their share of the world’s online data is a big responsibility for all data centres. And for business customers, it’s crucial to know where that data is held and how it is used. We’ve explored cybersecurity in a previous blog, and some of these measures also apply to protecting the cloud. But what specific protections exist to keep the cloud safe? In the UK, the government’s National Cyber Security Centre (NCSC) explains 14 distinct cloud security principles, including:

These security principles give an easy-to-follow framework for any businesses using, accessing or storing data in the cloud. Encrypting data is another huge security aspect for anyone using cloud storage.

The cloud is highly flexible and gives businesses a scalable model for data storage. If your needs change, you can add storage as you need it. With a reliable internet connection, plus appropriate encryption and authentication to keep customer data safe, the opportunities are almost endless.

Keep following our easy data series for more insights.

Data and keeping it safe go hand-in-hand. And today, more of the world is online and more data is being created than ever before. In the second blog in our series covering all things data, we’re looking at the world of cybersecurity and discovering the impact it has on us all. Whether your data is big or small, national, international, business-specific, or personal, cybersecurity is an area that helps to keep everyone safe.

The classic image in the movies is of cybersecurity defenders and attackers playing cat and mouse in front of screens with rapidly scrolling text. While there is a degree of truth to this image, cybersecurity is a much broader sphere and one that we all have a role to play within.

There are many different programmes and tools under the rapidly expanding cybersecurity umbrella. In the UK, the government’s National Cyber Security Centre (NCSC) defines cybersecurity as “how individuals and organisations reduce the risk of cyber attack.” Cybersecurity is as much a mindset as it is a collection of tools used to achieve it.

Protecting data naturally includes protecting the devices and services we all use to process that data. Most tech users will find their data is spread across smartphones, laptops, desktops and servers, which makes it hard to keep track of. All of this takes place on an uneven playing field. An attacker only needs to find one weakness in any of those devices to succeed, whereas the defender needs to keep every one of them 100% secure all the time.

According to IBM Security, the average cost of a data breach in the UK is £3.2 million. That figure shows both how valuable data is and how expensive a breach is to put right. Search for high-profile cyber attacks and you will quickly find news of organisations of all kinds and sizes falling victim to cybercrime. Ransomware can hit the smallest organisation or the largest government body. Each faces large costs: a ransom demanded by the attacker, plus the cost of putting right any harm done to the individuals whose data was leaked. It’s easy to see why cybersecurity is so crucial.

Cost is not the only thing that makes data breaches so dangerous. Your brand is also on the line. Personal data is essentially a currency for brands and businesses, given in exchange for trust. When that trust is broken, it can be hard to regain, and the damage can last far longer than the breach itself takes to resolve.

Right now, an estimated 328.77 million terabytes of data are produced every single day. Customers trust organisations with their personal information, also called personally identifiable information, or PII. Think of everything you share with an online retailer when you buy from them. Your name, address, financial details and possibly more are all held on the retailer’s servers, and the retailer is responsible for keeping that data safe. Exposing that data to risk threatens both the individual’s privacy and the retailer’s livelihood.

And it’s not just online retailers that need to consider the risks of holding personal information. All businesses have a legal and ethical responsibility to protect their customers’ and employees’ data. Organisations handling more sensitive information, such as national security or health data, must have particularly strong data protection measures in place. On a smaller but still crucial scale, solicitors can be hit by cyber attacks that stop housing transactions from completing, with a real impact on people’s lives. There are countless kinds of data, but all of it must be protected.

What are the themes to watch? It depends on your industry, because the programmes and systems available are always changing and improving. Whether it’s technology such as firewalls, regulation such as GDPR or something else entirely, here are some trends to watch.

Keep following our blog series for more updates on the future of data, web development and more.

Updated February 2024

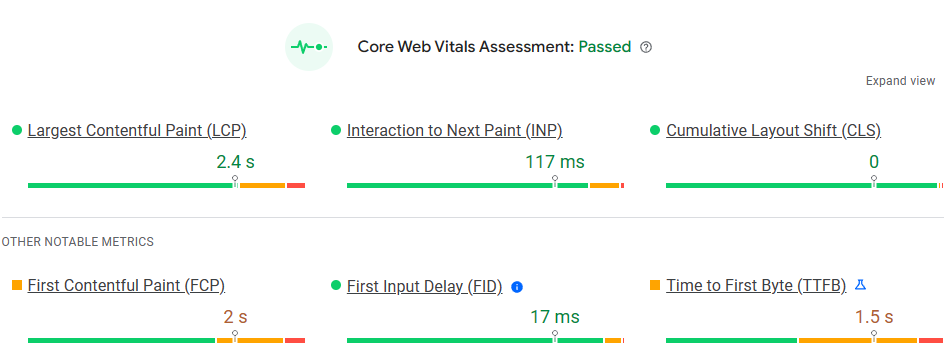

Google uses the page experience as part of their ranking algorithm, making it yet another factor to keep on top of to achieve a high position in the search results.

Google have grouped these under the title of Core Web Vitals, to provide a snapshot health-check of your site – but crucially it can use real visitor data.

Core Web Vitals are metrics introduced by Google to measure visitors’ real-world experience of a webpage. They comprise three primary measurements: how quickly the page loads, how soon users can interact with it and how visually stable it is.

The Core Web Vitals are Largest Contentful Paint (LCP), Cumulative Layout Shift (CLS) and First Input Delay (FID).

Not exactly snappy titles and not immediately obvious what they are. Strap in, here we go:

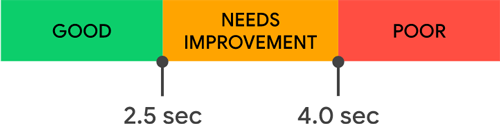

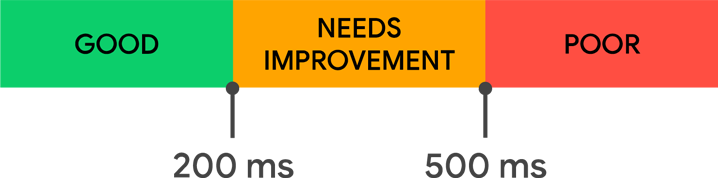

Largest Contentful Paint measures how long it takes for the largest piece of content on a page to appear for a real site user. This covers the time from clicking a link to seeing the page’s main content on your screen, and Google recommends it should happen within 2.5 seconds.

To analyse LCP, Google looks at the largest piece of content (be it text, video or an image) that appears in the visible area of the screen. As the page loads and larger elements appear, the measurement moves to the new largest element. This continues until the page has fully loaded or the user starts interacting with it. Several elements can affect loading speed, but the main factors for improving LCP include:

LCP mainly depends on how your site was built in the first place and what content you’ve added since. From a site owner’s point of view, it’s important to compress images correctly for web use. You can do this before uploading them, or have the site apply compression automatically.
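The candidate-tracking idea can be made concrete with a short Python sketch. It is not how browsers implement LCP. The `PaintEvent` record, its fields and the `largest_contentful_paint` function are hypothetical. The sketch assumes we already know when each element was painted and how much of the screen it covers. Real browsers also apply further rules about which elements count.

```python
from dataclasses import dataclass


@dataclass
class PaintEvent:
    """Hypothetical record of one element being rendered on screen."""
    time_ms: float  # when the element was painted, from the start of navigation
    element: str    # illustrative label, e.g. "hero image"
    area_px: int    # visible area of the element, in pixels


def largest_contentful_paint(paints, first_input_ms=None):
    """Return (time_ms, element) for the final LCP candidate, or None.

    Each time a larger element is painted it becomes the new candidate.
    Paints that happen after the user's first interaction are ignored.
    """
    candidate = None
    for paint in sorted(paints, key=lambda p: p.time_ms):
        if first_input_ms is not None and paint.time_ms >= first_input_ms:
            break  # user interaction ends the search for new candidates
        if candidate is None or paint.area_px > candidate.area_px:
            candidate = paint
    if candidate is None:
        return None
    return candidate.time_ms, candidate.element
```

Suppose a heading is painted at 0.4 seconds, a hero image at 1.2 seconds and a larger promo banner at 3 seconds. The banner becomes the LCP element. If the user interacts at 2 seconds, the banner is ignored and the hero image’s 1.2 second paint becomes the LCP.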

Cumulative Layout Shift measures the visual stability of a page as it loads. Layout shift, also known as ‘layout jank’, happens when content keeps moving around even though the page appears to have loaded. This is annoying and can cause a user to click the wrong link.

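Each layout shift is scored by multiplying two values. The first is the share of the screen affected by the moving content (the impact fraction). The second is how far that content moved relative to the screen size (the distance fraction). Google recommends a CLS score of 0.1 or less, so that only minimal elements move as the page loads.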
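The scoring rule for a single layout shift fits in a small function. This is a sketch under stated assumptions: the function name is ours, and both inputs are taken as already-measured fractions of the viewport. Google then groups individual shifts into bursts and reports the largest burst as the page’s CLS. That grouping step is left out here.

```python
def layout_shift_score(impact_fraction: float, distance_fraction: float) -> float:
    """Score one layout shift as impact fraction multiplied by distance fraction.

    impact_fraction: share of the viewport covered by the moving content,
        counting its old and new positions together (0 to 1).
    distance_fraction: furthest distance the content moved, divided by the
        viewport's largest dimension (0 to 1).
    """
    for value in (impact_fraction, distance_fraction):
        if not 0.0 <= value <= 1.0:
            raise ValueError("fractions must be between 0 and 1")
    return impact_fraction * distance_fraction
```

Take an element filling half the screen that moves down by a quarter of the screen height. Its old and new positions together cover 75% of the viewport, and it moves 25% of the viewport, so the score is 0.75 × 0.25 = 0.1875. That single shift is already well above the 0.1 recommendation.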

Again, layout shift is going to be mainly influenced by how the site was originally built.

Largest Contentful Paint measures whether the page is viewable. First Input Delay measures whether a user can interact with the page, for example by clicking a link. A page is often viewable before it is usable, because the browser is still busy loading other items in the background.

Long input delays tend to happen while the page is still loading. Some content is already visible and users expect to interact with it, but the browser is fully occupied with loading the rest of the page. Improving FID is therefore mainly about making the page load faster.

Google rates an FID of 100 milliseconds or less as Good.
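The sketch below shows roughly how these targets are applied: it sorts a measured value into Google’s three bands. The “Good” limits match the figures quoted in this article. The “Poor” cut-offs (4 seconds for LCP, 0.25 for CLS, 300 milliseconds for FID) are Google’s published values at the time of writing and may change. The `THRESHOLDS` table and `rate` function are our own names. For field data, Google assesses the 75th percentile of page visits rather than a single measurement.

```python
# (good_up_to, poor_above) for each metric.
# "Good" limits match the article; "Poor" limits are Google's published
# cut-offs at the time of writing and may change.
THRESHOLDS = {
    "LCP": (2500, 4000),  # milliseconds
    "CLS": (0.1, 0.25),   # unitless score
    "FID": (100, 300),    # milliseconds
}


def rate(metric: str, value: float) -> str:
    """Classify a metric value as Good, Needs improvement or Poor."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "Good"
    if value <= poor:
        return "Needs improvement"
    return "Poor"
```

For example, an LCP of 3.2 seconds would count as needing improvement.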

Implementing a site cache (such as Cloudflare) and reviewing slow third-party JavaScript can quickly reduce your First Input Delay.

From March 2024, Google will use Interaction to Next Paint (INP) instead of First Input Delay (FID). INP assesses how responsive a page is to user interactions, such as a click or a key press, by measuring how long the user interface takes to update afterwards. An interaction that leaves the page unresponsive is a poor user experience. INP observes the latency of every interaction a user has with the page. It then reports a single value that all (or nearly all) of those interactions fell below. A low INP means the page consistently responded quickly to all, or the vast majority, of user interactions.
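The “all (or nearly all)” rule can be sketched in a few lines. This assumes Google’s published description, in which one of the highest interactions is ignored for every 50 interactions on the page. The function name is hypothetical, and the latencies are assumed to be already measured in milliseconds. Google rates an INP of 200 milliseconds or less as Good.

```python
def interaction_to_next_paint(latencies_ms):
    """Approximate INP from a list of interaction latencies in milliseconds.

    Assumption: one of the highest interactions is ignored for every 50
    interactions, so a single unusually slow one on a busy page does not
    define the whole score.
    """
    if not latencies_ms:
        return None  # no interactions recorded, so no INP value
    ordered = sorted(latencies_ms, reverse=True)  # slowest first
    skip = len(ordered) // 50                     # outliers to ignore
    return ordered[skip]
```

On a page with fewer than 50 interactions, this returns the slowest one. On a busier page, a single unusually slow interaction is ignored.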

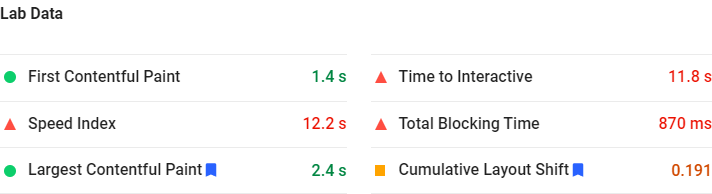

PageSpeed Insights

The easiest way to view your vitals is through Google’s PageSpeed Insights. In the results, some sites will show a Field Data section based on real visitors. This is usually only available for larger sites with enough traffic.

If Field Data isn’t shown, you can still see a snapshot from Lab Data underneath. Lab Data comes from a simulated test rather than real visitors. Running the report several times for the same page can give different results.
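PageSpeed Insights also has an API, which is useful if you want to check several pages regularly. The sketch below assumes the public v5 endpoint and its `url` and `strategy` parameters. The helper names are ours. Automated or high-volume use may need an API key. In the JSON response, field data (when available) sits under `loadingExperience` and the lab results under `lighthouseResult`.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Public PageSpeed Insights API v5 endpoint (assumed current at time of writing).
PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url: str, strategy: str = "mobile") -> str:
    """Build a PageSpeed Insights API request URL for one page."""
    if strategy not in ("mobile", "desktop"):
        raise ValueError("strategy must be 'mobile' or 'desktop'")
    query = urlencode({"url": page_url, "strategy": strategy})
    return f"{PSI_ENDPOINT}?{query}"


def run_pagespeed(page_url: str, strategy: str = "mobile") -> dict:
    """Fetch the JSON report. Needs network access; may need an API key for heavy use."""
    with urlopen(psi_request_url(page_url, strategy), timeout=120) as response:
        return json.load(response)
```

`psi_request_url` works offline. `run_pagespeed` makes a real network request, and repeated runs for the same page may return different lab results.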

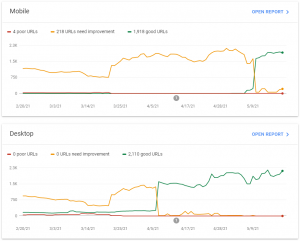

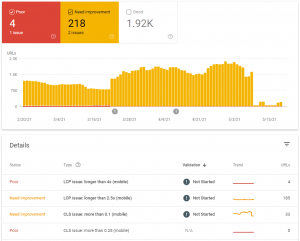

Google Search Console

For a more detailed view of Core Web Vitals, you will need to access Google Search Console. This will provide a graph of the number of pages with issues or improvements required over time, split between mobile and desktop.

Selecting Open Report next to these graphs shows you what the issues relate to. Selecting any of the Types in the Details section shows which pages are affected.

PageSpeed Insights is Google’s tool for testing website performance.

A Google PageSpeed Score typically ranges from 0 to 100 with a higher score indicating better performance. The score is calculated based on how a page performs in terms of various performance metrics. The specific algorithm and weightings may change over time, but it generally takes into account factors like page load times, content rendering, and interactivity.
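The overall score is a weighted average of individual metric scores. The sketch below assumes the weightings used by Lighthouse 10, the engine behind PageSpeed Insights at the time of writing. As noted above, Google may change them. It also assumes each metric has already been converted to a score between 0 and 1. Lighthouse does that conversion with curves based on real-world site data, which is not shown here.

```python
# Assumed weightings, based on Lighthouse 10; Google may change these.
WEIGHTS = {
    "first-contentful-paint": 0.10,
    "speed-index": 0.10,
    "largest-contentful-paint": 0.25,
    "total-blocking-time": 0.30,
    "cumulative-layout-shift": 0.25,
}


def performance_score(metric_scores: dict) -> int:
    """Combine per-metric scores (each 0.0 to 1.0) into a 0-100 score."""
    missing = WEIGHTS.keys() - metric_scores.keys()
    if missing:
        raise ValueError(f"missing metric scores: {sorted(missing)}")
    weighted = sum(WEIGHTS[name] * metric_scores[name] for name in WEIGHTS)
    return round(weighted * 100)
```

In PageSpeed Insights, scores of 90 and above are shown as good, 50 to 89 as needing improvement and below 50 as poor.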

The PageSpeed Score is often linked to the user experience. A higher score usually correlates with faster loading and a better user experience. Google and other search engines consider page speed and user experience when ranking pages in search results, so a better score can lead to improved search rankings and user engagement.

An overall Speed score is calculated by looking at the categories for each metric:

Core Web Vitals are a bit like the emissions test in your car’s MOT. They measure several areas, all designed to improve the quality of the environment, or in this case the internet. Google now uses Core Web Vitals as part of its ranking algorithm. Any site that wants to hold its search engine positions will need to monitor them and keep the site in tip-top condition.

Many of the world’s major sporting events take place every four years, such as international football tournaments, the cricket and rugby world cups and, of course, the Olympic Games. The summer Olympic Games generally take place in leap years. However, a century year is only a leap year if it is also divisible by 400, so 2100 won’t be a leap year even though it is an Olympic year. The last summer Olympic Games were delayed by a year because of the Coronavirus pandemic, which has had a major impact on the business and technology worlds over the last four years.
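The leap year rule above fits in a single line of code. This Python sketch (the function name is ours) applies the Gregorian calendar rule. A year divisible by 4 is a leap year, unless it is a century year that is not divisible by 400.

```python
def is_leap_year(year: int) -> bool:
    """Gregorian rule: every 4th year, except century years not divisible by 400."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)
```

So 2000 was a leap year and 2024 is one. 2100 will not be, even though it is divisible by 4.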

The pace of technological change has brought huge leaps in artificial intelligence over the last four years. ChatGPT was only launched in November 2022, but it has quickly become familiar to the general public. Before that, AI had mostly been discussed within the technical sector. We’ve already written a few articles on this topic, such as AI Tools we are using. If you’re using February 29th to think about how your business approaches the next four years, consider how you could integrate some AI into your processes.

If you’re not quite ready for AI yet, you might take a step back to consider other systems within your business. Something that worked well four years ago may now be getting unwieldy as your business grows. Security and availability of data are crucial to the success of a business. If you started out storing data in a spreadsheet such as Microsoft Excel, now might be the time to consider whether that is still the best home for your business-critical data.

In general, many companies look to redesign or refresh their websites every 2-3 years. If your website hasn’t changed since the last leap year, it might be worth thinking about a refresh. We’re always happy to talk about how we can help with that process.

This leap day we hope you get the chance to take that step back from the day to day to look at the bigger picture for your business.

Discover how our team can help you on your journey.

Talk to us today